As artificial intelligence (AI) continues to dominate headlines and transform industries, it’s clear that the infrastructure supporting this technology must evolve too. With AI technologies now a critical part of business strategy, particularly in real estate and other data-intensive fields, data centers are facing new demands. But what exactly does this mean for data centers, and how are they adapting to the growing needs of AI?

The Rise of AI and Its Impact on Data Centers

AI has moved from a niche technology to a central component of many industries, with applications ranging from healthcare real estate to commercial investment analysis. These advancements bring challenges, especially for data centers, which must now supply the immense computational power that AI applications require.

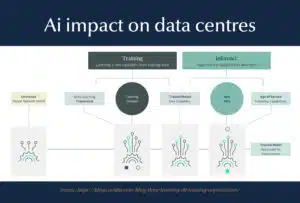

The AI boom has introduced two critical processes to data centers: training and inference. Training involves teaching a model to recognize patterns or perform tasks using large datasets. This process is power-intensive, requiring significant computational resources.

On the other hand, inference is the application of the trained model to new data, which often needs to happen in real-time, demanding both speed and accuracy.
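To make the distinction concrete, here is a deliberately tiny sketch. It is not how production models are built: it fits a single hypothetical parameter `w` with plain gradient descent. It does, however, show the asymmetry described above. Training sweeps over the whole dataset many times, while inference is one cheap evaluation per request.

```python
# Toy sketch: a one-parameter model y ~ w * x (hypothetical, for illustration).

def train(xs, ys, lr=0.01, epochs=1000):
    """Training: fit w by gradient descent on mean squared error.

    Every epoch is a full pass over the dataset, so cost grows with
    epochs * len(xs). This is the compute-heavy phase.
    """
    w = 0.0
    n = len(xs)
    for _ in range(epochs):
        # Derivative with respect to w of (1/n) * sum((w*x - y)^2)
        grad = sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / n
        w -= lr * grad
    return w


def infer(w, x):
    """Inference: apply the already-trained parameter to one new input."""
    return w * x


xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0, 4.0, 6.0, 8.0]   # generated by y = 2x
w = train(xs, ys)           # 1,000 passes over the data
print(f"learned w = {w:.4f}")
print(f"prediction for x = 10: {infer(w, 10.0):.2f}")  # a single multiplication
```

Real models have billions of parameters rather than one, which is what turns this asymmetry into racks of accelerators for training and a premium on fast response for inference.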

To understand how data centers are adapting, we must first explore these two processes in more detail.

Training: The Backbone of AI

Training an AI model involves processing vast amounts of data and repeatedly adjusting the model’s internal parameters until it performs its task well. Because it is one of the most compute-intensive stages of AI development, data centers that support AI training require robust infrastructure, often with high-performance computing (HPC) capabilities.

For example, modern data centers are evolving to include high-density rack systems that can handle the intense workloads AI demands. A single NVIDIA DGX H100 system, popular among AI developers, draws roughly 10 kW on its own. Place several of these in one rack, and you’re looking at rack densities of 40-120 kW, significantly higher than traditional setups.
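The arithmetic behind these rack densities is simple enough to sketch. The snippet below assumes each system draws the ~10 kW figure cited above continuously (actual draw varies with load) and ignores networking and other rack equipment; the function names are illustrative only.

```python
import math

# Assumption: ~10 kW per DGX H100-class system, as cited above.
SYSTEM_KW = 10.0


def rack_density_kw(system_kw: float, systems_per_rack: int) -> float:
    """Rack IT load if every system runs at its rated power."""
    return system_kw * systems_per_rack


def systems_within_budget(rack_budget_kw: float, system_kw: float) -> int:
    """Whole systems a rack power budget can feed (power only, not space)."""
    return math.floor(rack_budget_kw / system_kw)


for n in (1, 2, 4):
    print(f"{n} system(s) per rack: {rack_density_kw(SYSTEM_KW, n):.0f} kW")

print(systems_within_budget(40.0, SYSTEM_KW))   # low end of the 40-120 kW range
print(systems_within_budget(120.0, SYSTEM_KW))  # high end of the range
```

Four such systems already reach the low end of the range. Power is only one constraint; physical space, weight, and cooling capacity determine what can actually be installed in a rack.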

This shift towards higher power densities necessitates innovations in cooling technology. Traditional air cooling, sufficient for typical data center operations, often falls short at the densities AI workloads reach. Developers are turning to liquid cooling, including direct-to-chip and immersion approaches, to manage the heat generated by these powerful systems efficiently.

Inference: The Real-Time Application of AI

While training sets the foundation, inference is where AI comes to life. Inference involves using a trained model to make predictions or decisions based on new data. This process needs to happen quickly, often in real-time, which means latency becomes a critical factor.

Unlike training, which can take place in remote locations with less concern for latency, inference typically requires proximity to end users to ensure seamless service delivery. This is why data centers supporting AI inference are often located near population centers or in strategically placed colocation facilities.
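Physics sets a floor on that latency. The rough sketch below assumes signals travel through optical fiber at about 200,000 km/s (roughly two-thirds the speed of light in a vacuum) and estimates the minimum round-trip delay from distance alone; real networks add routing detours, switching, and processing time on top.

```python
# Assumption: signals in optical fiber travel at roughly 200,000 km/s,
# i.e. about 200 km per millisecond.
FIBER_KM_PER_MS = 200.0


def round_trip_ms(distance_km: float) -> float:
    """Minimum round-trip propagation delay over a straight fiber path.

    Ignores routing detours, queuing, and server processing time,
    so observed latency will be higher.
    """
    return 2 * distance_km / FIBER_KM_PER_MS


for km in (50, 500, 3000):
    print(f"{km:>5} km away: at least {round_trip_ms(km):.1f} ms round trip")
```

Under this assumption, a facility 50 km away adds about half a millisecond per round trip, while one 3,000 km away adds at least 30 ms before any computation happens.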

For instance, AI applications in sectors like luxury retail or financial services need to operate with minimal delay to meet customer expectations. The demand for low-latency services pushes data centers to optimize their infrastructure, balancing the need for speed with the challenges of managing high-performance systems.

The Power Challenge: Scaling Up for AI

One of the biggest challenges data centers face in supporting AI is power management. AI-ready data centers are expected to operate at much higher power levels than their predecessors. While traditional data centers may operate at 5-50 MW, AI-centric facilities could see power demands of 200-300 MW or more.
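To see what those megawatt figures mean in hardware terms, the sketch below estimates how many racks a facility could power. It uses power usage effectiveness (PUE), the ratio of total facility power to IT equipment power. The PUE values and rack densities in the example are assumptions chosen for illustration, not figures from this article.

```python
import math


def it_capacity_kw(facility_mw: float, pue: float) -> float:
    """Power left for IT equipment after facility overhead.

    PUE = total facility power / IT power, so IT power = facility power / PUE.
    """
    return facility_mw * 1000 / pue


def racks_supported(facility_mw: float, pue: float, rack_kw: float) -> int:
    """Whole racks the facility can power at the given rack density."""
    return math.floor(it_capacity_kw(facility_mw, pue) / rack_kw)


# Hypothetical scenarios:
print(racks_supported(20, 1.5, 10))     # smaller facility, 10 kW racks
print(racks_supported(200, 1.25, 100))  # AI-scale facility, 100 kW racks
```

The takeaway is how quickly dense racks consume capacity: at 100 kW each, 1,600 racks already account for 160 MW of IT load.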

Meeting these demands requires not only substantial power infrastructure but also a reliable and sustainable energy supply. As AI continues to grow, data centers must explore alternative energy sources and more efficient power delivery methods to remain viable.

This shift also impacts real estate and infrastructure investments. As seen in the recent U.S. real estate market outlook, regions with access to renewable energy, favorable land prices, and stable climate conditions are becoming prime locations for new data center developments.

Cooling: Managing the Heat of High-Performance AI

With greater computational power comes more heat, making cooling a critical aspect of modern data center design. As noted earlier, traditional air cooling often cannot keep up with the rack densities associated with AI workloads.

Liquid cooling, whether through direct-to-chip cold plates or full immersion, is emerging as a viable solution for managing the heat generated by powerful AI processors. Liquids absorb and carry away far more heat per unit volume than air, helping data centers keep processors within safe operating temperatures even under sustained heavy load.
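The advantage of liquids comes down to heat capacity. Using the relation Q = m_dot × c_p × ΔT (heat removed equals mass flow times specific heat times temperature rise), the sketch below compares how much water versus air must flow to remove the heat of a hypothetical 100 kW rack. The fluid properties are approximate room-temperature values, and the 10 K temperature rise is an assumption.

```python
# Approximate fluid properties near room temperature (assumed values).
WATER_CP = 4186.0       # J/(kg*K)
WATER_DENSITY = 1000.0  # kg/m^3
AIR_CP = 1005.0         # J/(kg*K)
AIR_DENSITY = 1.2       # kg/m^3


def volumetric_flow_m3s(heat_w, cp, density, delta_t_k):
    """Coolant volume per second needed to carry away heat_w watts.

    From Q = m_dot * c_p * delta_T, m_dot = Q / (c_p * delta_T);
    dividing by density converts mass flow to volume flow.
    """
    mass_flow = heat_w / (cp * delta_t_k)
    return mass_flow / density


RACK_HEAT_W = 100_000  # hypothetical 100 kW rack
DELTA_T = 10.0         # assumed coolant temperature rise, K

water = volumetric_flow_m3s(RACK_HEAT_W, WATER_CP, WATER_DENSITY, DELTA_T)
air = volumetric_flow_m3s(RACK_HEAT_W, AIR_CP, AIR_DENSITY, DELTA_T)
print(f"water: {water * 1000 * 60:.0f} L/min")
print(f"air:   {air:.1f} m^3/s")
print(f"air needs about {air / water:.0f}x the volume of water")
```

With these assumptions, water needs roughly 140 L/min while air needs over 8 m³ every second, a volume ratio of about 3,500 to 1.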

The push towards advanced cooling methods also aligns with broader sustainability goals. By improving cooling efficiency, data centers can reduce their overall energy consumption, contributing to lower operational costs and a smaller carbon footprint.
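One common way to quantify that benefit is PUE (total facility energy divided by IT equipment energy). Assuming a constant IT load, the sketch below estimates the annual energy saved when cooling improvements lower a facility’s PUE. The 10 MW load and the before/after PUE values are hypothetical.

```python
HOURS_PER_YEAR = 8760


def annual_facility_mwh(it_load_mw: float, pue: float) -> float:
    """Total facility energy over a year at a constant IT load.

    Facility power = IT power * PUE; everything above 1.0 is overhead
    such as cooling, power distribution losses, and lighting.
    """
    return it_load_mw * pue * HOURS_PER_YEAR


def annual_savings_mwh(it_load_mw: float, pue_before: float, pue_after: float) -> float:
    """Energy saved per year when PUE drops, with the same IT work done."""
    return (annual_facility_mwh(it_load_mw, pue_before)
            - annual_facility_mwh(it_load_mw, pue_after))


print(f"{annual_savings_mwh(10, 1.6, 1.3):,.0f} MWh saved per year")
```

Under these assumptions, cutting PUE from 1.6 to 1.3 saves about 26,280 MWh per year without any change to the computing work performed.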

Looking Ahead: The Future of AI-Driven Data Centers

The continued proliferation of AI will undoubtedly drive further demand for advanced data center infrastructure. For investors and developers, this presents both challenges and opportunities. Navigating the complexities of AI-ready data centers requires a deep understanding of the technology, as well as strategic planning to ensure that facilities can meet the needs of tomorrow.

Partnering with experienced service providers like Ironsides Group can unlock significant value across the data center lifecycle, from site selection and due diligence to leasing and operation. As AI technologies evolve, so too must the facilities that support them. By staying ahead of these trends, businesses can ensure they remain competitive in an increasingly AI-driven world.

For those looking to expand or enter the data center market, it’s essential to consider not just the current demands of AI, but also its future trajectory. The next generation of data centers will need to be more powerful, more efficient, and more strategically located than ever before.

Conclusion

AI’s rapid growth is reshaping the landscape of data centers, pushing the boundaries of what’s possible in terms of power, cooling, and performance. As we look to the future, the evolution of data centers will play a crucial role in enabling the continued advancement of AI technologies.

Whether you’re an investor, developer, or operator, understanding these trends and preparing for the challenges ahead will be key to success in this dynamic field. Explore Ironsides Group’s services to learn more about how we can help you navigate the complexities of AI-driven data centers and maximize your investment in this rapidly evolving industry.

For further insights, consider reading our recent analysis on U.S. economic growth and how macroeconomic factors could impact your real estate strategy in the coming years.